on a small, manageable representation of text embeddings

Originally posted on Mastodon.

I finally got out of a bit of a depressive funk when it comes to coding things for fun, and I was able to make something cute that I think might even be useful.

I've been playing with #LLM text #embedding #models.

I've been interested in using them as a tool to filter long lists of text into a shorter list that I can process myself.

Specific example: I wanted to find all recently published papers in a certain topic area from a journal in my field. There's ~300 papers. Only ~10 are relevant.

For my first attempt, I hand-picked ~5 papers out of ~100. I then used each paper's title, author list, abstract, and keywords to get a text representation of the paper.

I knew that I wanted to try applying Content Defined Chunking to the problem, to split a long text into more manageable pieces.
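As a rough illustration of what content-defined chunking means, here's a minimal sketch. It is not the code from the notebook: the function name `cdc_chunks`, the parameters `target_size` and `window`, and the choice of CRC32 as the hash are all assumptions made for this example. The idea is that a chunk boundary is placed wherever a hash of the last few characters hits a chosen pattern, so boundaries depend on the text itself rather than on fixed offsets.

```python
import zlib


def cdc_chunks(text: str, target_size: int = 32, window: int = 8) -> list[str]:
    """Split text at positions chosen by a hash of the preceding `window` characters.

    Hypothetical helper for illustration. A real implementation would use a
    rolling hash instead of re-hashing the window at every position.
    """
    chunks = []
    start = 0
    for i in range(len(text)):
        # Never cut before the current chunk holds at least `window` characters.
        if i + 1 - start < window:
            continue
        h = zlib.crc32(text[i + 1 - window : i + 1].encode("utf-8"))
        # Cut when the hash matches the pattern (about 1 in `target_size` positions).
        if h % target_size == 0:
            chunks.append(text[start : i + 1])
            start = i + 1
    # Whatever is left over becomes the final chunk.
    if start < len(text):
        chunks.append(text[start:])
    return chunks
```

Because the cut points come from the content, inserting a sentence near the start of a document usually only changes the chunks around the insertion, not every chunk after it.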

However, I didn't know the optimal chunking size, so I tried multiple strategies and weighted them by their length.

For instance, chunks of 32 chars at 0.25 weight vs. chunks of 256 chars at 0.75 weight.
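One way the weighting could work is sketched below. This is a guess at the general shape, not the notebook's code. Several things here are assumptions: `toy_embed` is a stand-in that counts character trigrams instead of calling a real embedding model, `fixed_chunks` uses plain fixed-size chunks rather than content-defined ones, and taking the best-matching chunk per strategy is just one possible way to aggregate.

```python
import math
from collections import Counter


def toy_embed(text: str) -> Counter:
    """Stand-in for a real embedding model: a bag of character trigrams."""
    lowered = text.lower()
    return Counter(lowered[i : i + 3] for i in range(len(lowered) - 2))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[k] * b[k] for k in a if k in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def fixed_chunks(text: str, size: int) -> list[str]:
    """Fixed-size chunks, used here only to keep the sketch short."""
    return [text[i : i + size] for i in range(0, len(text), size)]


def weighted_score(query: str, document: str,
                   strategies=((32, 0.25), (256, 0.75))) -> float:
    """Combine the best chunk similarity from each (chunk size, weight) strategy."""
    q = toy_embed(query)
    total = 0.0
    for size, weight in strategies:
        best = max(
            (cosine(q, toy_embed(chunk)) for chunk in fixed_chunks(document, size)),
            default=0.0,
        )
        total += weight * best
    return total
```

Sorting documents by `weighted_score` against a handful of hand-picked examples then gives a ranked shortlist to read through.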

The result of this experiment is in this Google Colab notebook: https://gist.github.com/player1537/c5970698349ec635c361e92321f2ca1c

I was ultimately able to produce a list of around 40 papers that I should look more closely at. I'm still looking through these, but this is much better than the ~300 I started with.

While working on this, I realized that it'd be nice to be able to share a URL with someone that points to a specific point in a semantic embedding space.

The challenge: the smallest embedding models yield 384-dimensional vectors. Naively, this can be encoded as a very, very large URL (for example, 384 32-bit floats in base64 come to 2,048 characters), but not as something small enough that a human could feasibly type it themselves.

So, I wondered: could you embed a quantized version of the vector? What quantization would work: 8 bit? smaller? even 1 bit?

There's an interesting property that actually makes 1 bit encoding unique.

First, consider that when doing cosine similarity of embedding vectors, you first normalize the incoming vectors, then do a dot product.

Then, consider that encoding 0 bits as negative and 1 bits as positive means that you can use bitwise operators to do the dot product.
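To make the bitwise trick concrete, here's a minimal sketch (not the code from the gist; the function names and the choice to pack bits into a Python `int` are assumptions for this example). If each bit stands for +1 or -1, two positions contribute +1 to the dot product when their bits match and -1 when they differ. So the dot product is `n - 2 * popcount(a XOR b)`, and since every such vector has length `sqrt(n)`, the cosine similarity is that value divided by `n`.

```python
def quantize_1bit(vec) -> int:
    """Pack the signs of a vector into an int: 1 for positive, 0 otherwise."""
    bits = 0
    for x in vec:
        bits = (bits << 1) | (1 if x > 0 else 0)
    return bits


def sign_dot(a_bits: int, b_bits: int, n: int) -> int:
    """Dot product of two +1/-1 vectors, computed from their packed bits."""
    differing = bin(a_bits ^ b_bits).count("1")  # popcount of the XOR
    return n - 2 * differing


def sign_cosine(a_bits: int, b_bits: int, n: int) -> float:
    """Cosine similarity; every +1/-1 vector of length n has norm sqrt(n)."""
    return sign_dot(a_bits, b_bits, n) / n


a = quantize_1bit([0.3, -1.2, 0.5, -0.1])  # signs: + - + -
b = quantize_1bit([0.1, 0.4, -0.9, -0.7])  # signs: + + - -
print(sign_dot(a, b, 4))     # 0: two positions agree, two disagree
print(sign_cosine(a, a, 4))  # 1.0: identical sign patterns
```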

I coded that idea: https://gist.github.com/player1537/cf5dc8853ccfe4767660e703d06d6a1e

Then, how to encode as a string? Well, we're already comfortable with UUIDs at 128 bits encoded as 36 characters (32 hex digits plus 4 hyphens), so encoding 384 bits as 64 base64 characters isn't much worse.
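A minimal sketch of the string encoding, assuming the 384 bits are held in a Python `int` and written out big-endian (the notebook's actual bit order and function names may differ; `bits_to_text` and `text_to_bits` are hypothetical). 384 bits is 48 bytes, and base64 turns every 3 bytes into 4 characters, so the result is exactly 64 characters with no padding.

```python
import base64


def bits_to_text(bits: int, n_bits: int = 384) -> str:
    """Encode an n_bits-wide integer as standard base64 text."""
    raw = bits.to_bytes(n_bits // 8, "big")
    return base64.b64encode(raw).decode("ascii")


def text_to_bits(text: str) -> int:
    """Decode base64 text back into the packed-bit integer."""
    return int.from_bytes(base64.b64decode(text), "big")


encoded = bits_to_text((1 << 383) | 1)
print(len(encoded))  # 64
```

The standard alphabet includes `+` and `/`, so for use inside a URL it may be worth swapping in `base64.urlsafe_b64encode` and `base64.urlsafe_b64decode`, which use `-` and `_` instead.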

The encoding process is also in that notebook.

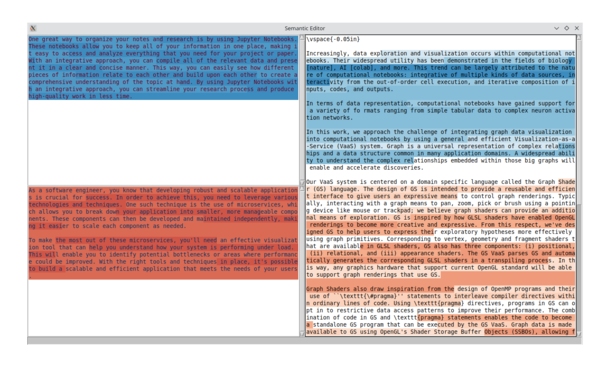

The punchline: this method allows you to represent the text “AdaVis: Adaptive and Explainable Visualization Recommendation for Tabular Data” as the embedding:

8eG2V2UQTNVqfa+mG/2zpGRaokJ00yr5b0ww6zybFrzLK2F2XepPCBpseCQnDopE

which is small enough to be manageable imo!